Airtight audit trail

Bring transparency to data sharing and access on a central platform connecting teams, data, and usage.

Having full control and awareness of your data is critical. Build structure and oversight that make compliance a piece of cake.

With so many moving parts, it can be hard to ensure data consistency and quality. Data health monitoring tools give you full confidence in your data.

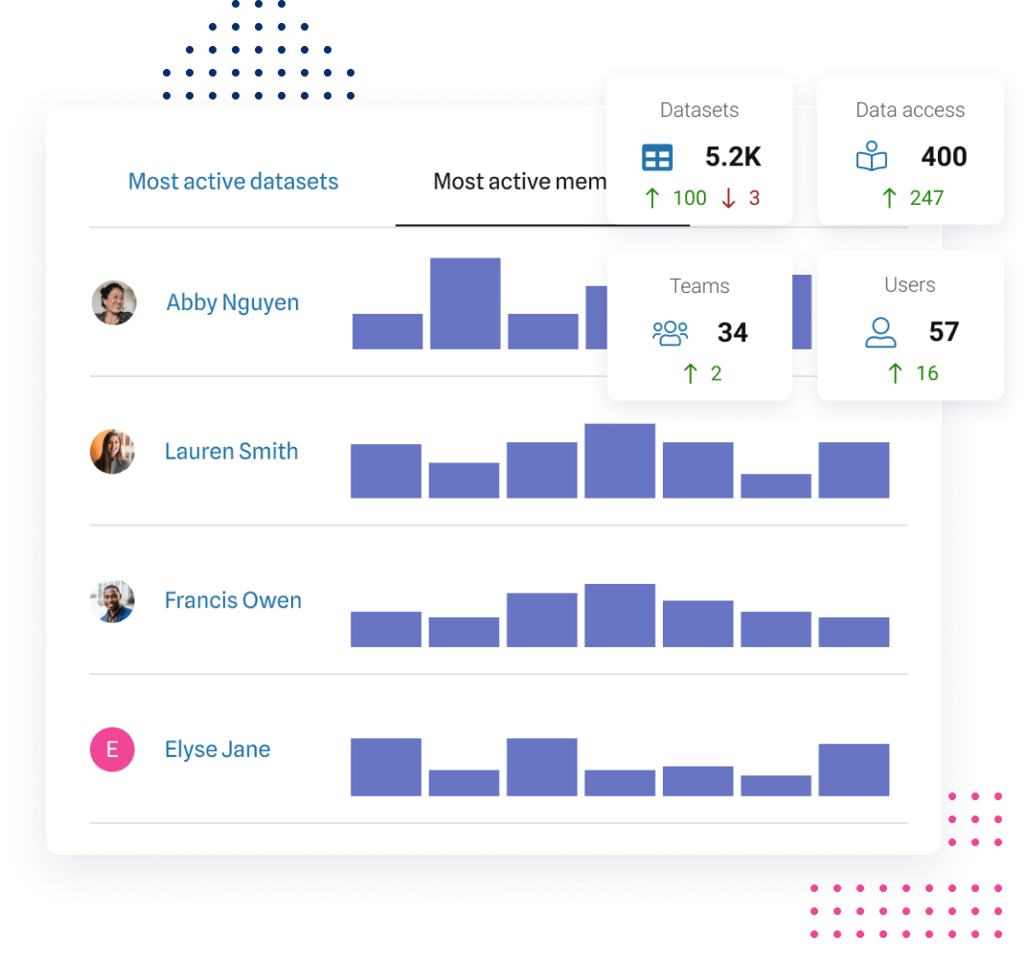

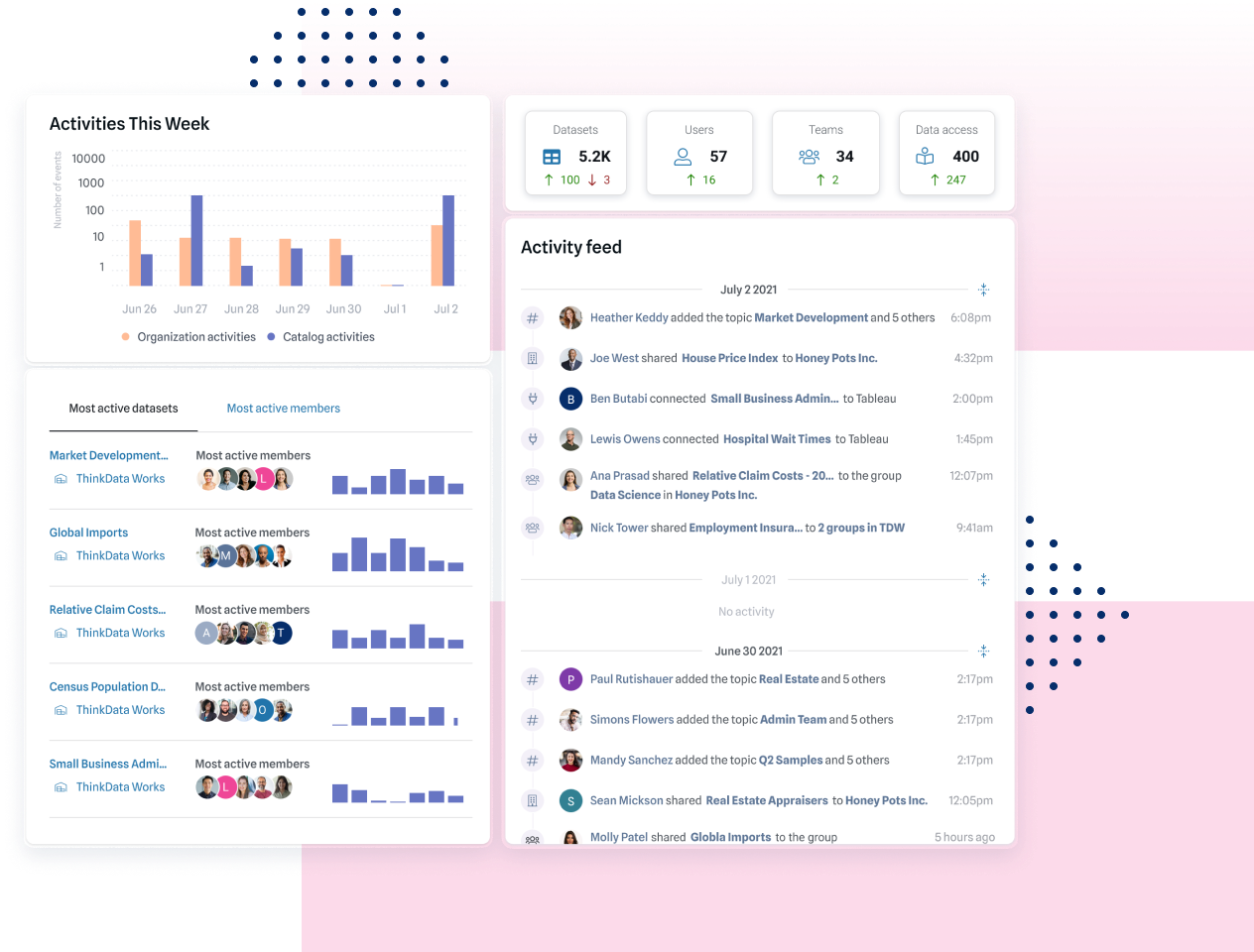

See granular details about what data is being used, and how. A connected dashboard gives you a direct line of sight into data access.

Investing in data privacy and governance isn't just about avoiding fines. It has a proven ROI.

$4.7B

1.8x

Governance shouldn't be an obstacle; it should let data flow wherever it needs to go in your organization.

Watch a quick demonstration of how sharing data through a catalog platform lets you manage data distribution at scale, no matter where the data comes from.

Government regulations for data security, data residency, and data control affect every jurisdiction. ThinkData Works lets you meet stringent requirements with ease.

Put intelligent systems in place. With a central platform and a flexible data governance framework, your teams get secure, compliant access to any data.

Transparency is power. A single source of truth reduces duplicate spend, gives clear insight into data health and updates, and raises your confidence in any data.

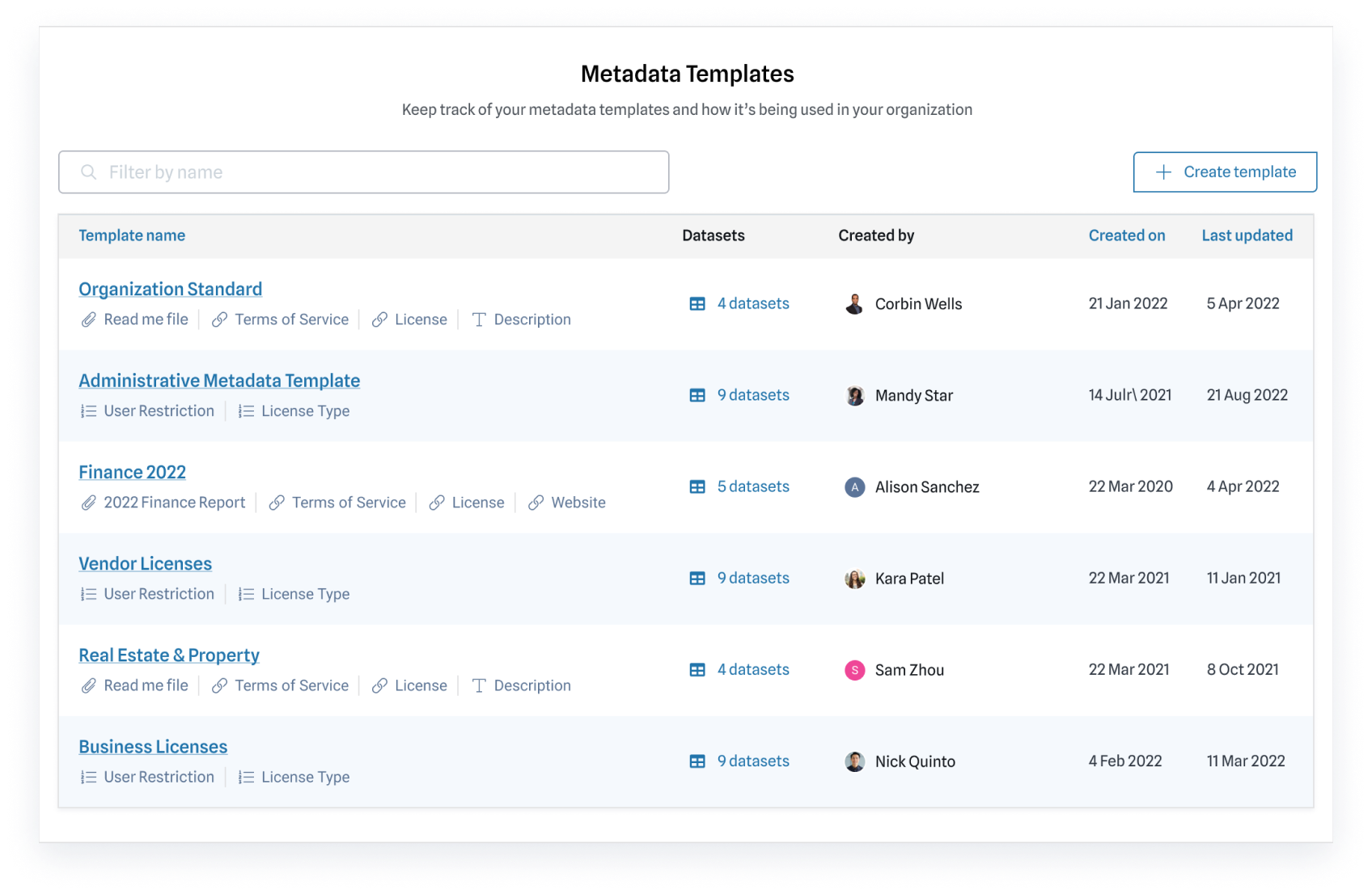

Enforcing strong governance means trackable, managed data. Grant secure access, create custom metadata, and understand how data is being used.

No two companies are the same, so we offer an extensible data management platform with role-based permissions and granular access control.

Confidence in regulatory compliance and data health is just the beginning. Strong data governance lets you unlock the value of data to grow your business.

A strong data governance framework fuels innovation. See how your people and technology can come together to support your growth.

Data-driven companies depend on the ability to:

- Model their org structure with role-based access controls and teams.
- Fuel smarter collaboration on a secure, central platform.
- Inform their strategy with metadata about data usage, access, and management.

With a strong foundation in place, you can accelerate data-driven innovation. Get in touch to see what else our end-to-end platform can do for you.